Last updated on: 25th Dec 2014

tl;dr: Solaris 11.2 deprecates the zfs_arc_max kernel parameter in favor of user_reserve_hint_pct, and that’s cool.

ZFS has a very smart cache, the so-called ARC (Adaptive Replacement Cache). In general, the ARC consumes as much memory as is available, and it frees memory again when other applications need more.

In theory this works very well: ZFS simply uses available memory to speed up slow disk I/O. But it also has side effects once the ARC has consumed almost all unused memory. Applications that request more memory have to wait until the ARC frees some up. For example, if you restart a big database, its startup may be significantly delayed, because in the meantime the ARC may already have taken over the memory released by the database shutdown. Additionally, such a database would likely request large memory pages; if the ARC occupies just some of the free segments, memory easily becomes fragmented.

That is why many users limit the total size of the ARC with the zfs_arc_max kernel parameter. With this parameter you can configure the absolute maximum size of the ARC in bytes. For a time I personally refused to use it, because it feels like “breaking the legs” of ZFS, it is hard to standardize (it is an absolute value), and changing it requires a reboot. But for memory-intensive applications this hard limit was simply necessary, until now.
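To make the “hard to standardize” point concrete: because zfs_arc_max takes an absolute byte count, one and the same policy (say, capping the ARC at a quarter of RAM) produces a different value on every machine. The sketch below assumes the classic `set zfs:zfs_arc_max=<bytes>` line in /etc/system; the helper arc_max_line is hypothetical and only does the arithmetic, it does not change anything on a system.

```python
GiB = 1024 ** 3


def arc_max_line(phys_bytes: int, pct: int) -> str:
    """Build an /etc/system line capping the ARC at pct percent of RAM.

    Hypothetical helper: it only computes the absolute byte value that
    zfs_arc_max expects (rounded down) and formats it as hex.
    """
    limit = phys_bytes * pct // 100
    return f"set zfs:zfs_arc_max=0x{limit:x}"


# The same 25% policy yields three different absolute values to maintain.
for ram_gib in (8, 64, 512):
    print(f"{ram_gib:>3} GiB RAM: {arc_max_line(ram_gib * GiB, 25)}")
```

Each of these values would also only take effect after a reboot.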

Solaris 11.2 finally addresses this pain point: the zfs_arc_max parameter is now deprecated. Instead, there is the new, dynamic user_reserve_hint_pct kernel parameter, which lets the system administrator tell ZFS what percentage of physical memory should be reserved for user applications. No reboot required!

So if you know your application will use 90% of your physical memory, you can just set this parameter to 90.
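Because the hint is a percentage, the amount it reserves scales with the machine’s physical memory by itself. A quick worked example, using the 2031 MB of physical memory of my test system and assuming the megabyte value is rounded down (which is consistent with the monitoring output further down); reserved_mb is a hypothetical helper name:

```python
def reserved_mb(phys_mb: int, hint_pct: int) -> int:
    """Memory (MB) that user_reserve_hint_pct asks ZFS to leave to applications.

    Hypothetical helper; assumes whole megabytes, rounded down.
    """
    return phys_mb * hint_pct // 100


print(reserved_mb(2031, 90))  # 1827 of 2031 MB reserved for applications
```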

Oracle provides a script called set_user_reserve.sh and additional documentation. Both can be found on My Oracle Support: “Memory Management Between ZFS and Applications in Oracle Solaris 11.2 (Doc ID 1663862.1)”. The script adjusts the parameter gradually, to give the ARC enough time to shrink.

According to my first tests, it works really well:

```
# ./set_user_reserve.sh -f 50
Adjusting user_reserve_hint_pct from 0 to 50
08:43:03 AM UTC : waiting for current value : 13 to grow to target : 15
08:43:11 AM UTC : waiting for current value : 15 to grow to target : 20
...
# ./set_user_reserve.sh -f 70
...
# ./set_user_reserve.sh 0
```
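The log suggests that the script does not jump straight to the target: it raises the value in 5% steps and waits until the current value has caught up before taking the next step. A minimal Python model of that schedule, assuming exactly this stepping rule as inferred from the log (reserve_steps is a hypothetical name, and this is not Oracle’s script):

```python
def reserve_steps(current: int, target: int, step: int = 5) -> list[int]:
    """Intermediate targets when growing the reservation from current to target.

    Assumed rule, inferred from the log: go to the next multiple of `step`
    above the current value, then keep adding `step` until target is reached.
    """
    steps = []
    nxt = (current // step + 1) * step  # next multiple of step above current
    while nxt < target:
        steps.append(nxt)
        nxt += step
    steps.append(target)  # always finish exactly on the requested target
    return steps


print(reserve_steps(13, 50))  # [15, 20, 25, 30, 35, 40, 45, 50]
```

Waiting at each intermediate step is what gives the ARC time to shrink, instead of demanding a large amount of memory back at once.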

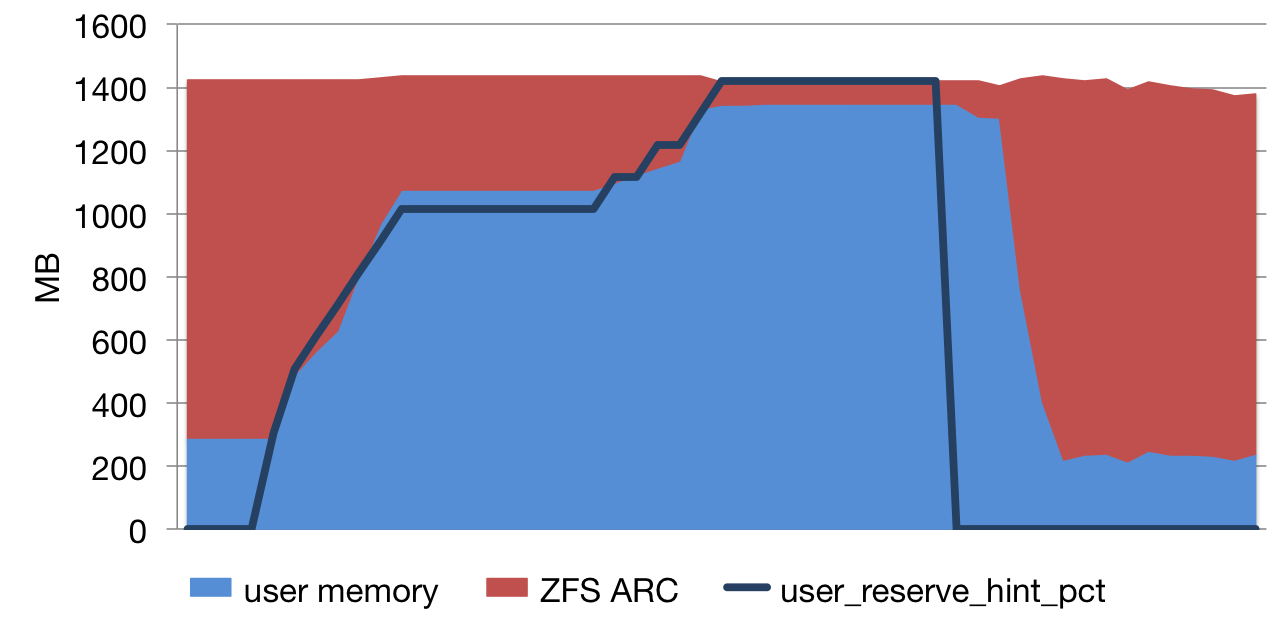

The chart above shows the memory consumption of the ZFS ARC and the memory available to user applications during my test. The line graph shows the value of user_reserve_hint_pct as the script adjusts it gradually. During the test, I set it to 50%, 70% and back to 0%. At the same time, I generated some I/O on the ZFS filesystem to populate the ARC.

As you can see, the ARC shrinks and grows according to the new parameter. The 70% reservation was never reached, because my test system (VirtualBox) did not have enough physical memory.

To generate the chart data, I wrote the following DTrace script:

```
#!/usr/sbin/dtrace -s
```

You can run the script while adjusting user_reserve_hint_pct, passing the desired sampling interval in seconds, for example every ten seconds:

```
# ./zfs_user_reserve_stat.d 10
PHYS  ARC   USR avail (MB)  USR(%)  user_reserve_hint_pct (MB)  user_reserve_hint_pct (%)
2031  1136  288             14      0                           0
2031  1051  364             17      304                         15
2031  934   489             24      507                         25
2031  863   561             27      609                         30
2031  798   627             30      710                         35
...
```
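The derived columns can be recomputed from the raw ones. A small Python check, assuming the script truncates to whole numbers (all five sample rows are consistent with that); it also prints how far the memory available to applications still lags behind the reservation, i.e. roughly how much the ARC still has to give back. The names usr_pct and shortfall_mb are hypothetical.

```python
# Sample rows copied from the output above:
# (PHYS MB, ARC MB, USR avail MB, USR %, hint MB, hint %)
ROWS = [
    (2031, 1136, 288, 14, 0, 0),
    (2031, 1051, 364, 17, 304, 15),
    (2031, 934, 489, 24, 507, 25),
    (2031, 863, 561, 27, 609, 30),
    (2031, 798, 627, 30, 710, 35),
]


def usr_pct(avail_mb: int, phys_mb: int) -> int:
    """Share of physical memory available to applications, truncated."""
    return avail_mb * 100 // phys_mb


def shortfall_mb(hint_mb: int, avail_mb: int) -> int:
    """MB still missing before available memory meets the reservation."""
    return max(0, hint_mb - avail_mb)


for phys, _arc, avail, pct, hint_mb, hint_pct in ROWS:
    assert usr_pct(avail, phys) == pct  # the truncation assumption holds
    print(f"hint {hint_pct:>2}%: short by {shortfall_mb(hint_mb, avail)} MB")
```

In these rows the gap grows while the hint is being raised (0, 0, 18, 48 and 83 MB), so the ARC appears to lag somewhat behind the target while it shrinks.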

I definitely need to play more with this parameter, but so far it looks like a big improvement over zfs_arc_max and a very good replacement. Does it also solve your pain with the ZFS ARC? Feel free to leave a comment.

Update 10th Aug 2014

- As pointed out by Martin in the comments, changing the ARC limit dynamically was already possible before this new parameter arrived. But because zfs_arc_max is clearly defined as non-dynamic in the Solaris Tunable Parameters Reference Manual, I think doing so is not officially supported and could have side effects.
- According to my first tests, the new Kernel Zones (KZ) still seem to need zfs_arc_max to be set. I got the “Failed to create VM: Not enough space” error while starting a KZ, and shrinking the ARC with user_reserve_hint_pct did not help. In my opinion, this is still a bug in the KZ implementation.

Update 25th Dec 2014

- According to my latest information, KZ sometimes fail to use the memory freed via user_reserve_hint_pct, because a KZ needs a large contiguous free memory segment, which is likely not available.
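Why freeing memory is not always enough can be shown with a toy model: two page maps with the same amount of free memory, where only one has a single run large enough for a large allocation. This is purely illustrative (pages as characters, a hypothetical 6-page request) and not how the Solaris memory subsystem actually tracks pages.

```python
def free_stats(page_map: str) -> tuple[int, int]:
    """Return (total free pages, longest contiguous free run).

    Toy model: '.' is a free page, '#' is an allocated page.
    """
    total = page_map.count(".")
    # Splitting on allocated pages leaves the runs of free pages.
    longest = max(len(run) for run in page_map.split("#"))
    return total, longest


NEEDED_RUN = 6  # hypothetical: pages one large allocation needs in one piece

for name, page_map in (("fragmented", "..#..#..#..#"), ("compact", "####........")):
    total, longest = free_stats(page_map)
    print(f"{name}: {total} free, longest run {longest}, fits: {longest >= NEEDED_RUN}")
```

Both maps report 8 free pages, but only the compact one can satisfy the 6-page request.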